AI-Powered Email Phishing: The Growing Cybersecurity Threat for Enterprises

Introduction: The New Age of Phishing

Phishing has long been one of the most successful attack vectors for cybercriminals. With the rise of artificial intelligence (AI), however, email phishing has become smarter, faster, and harder to detect. Traditional spam filters struggle against AI-generated content, and employees increasingly fall victim to messages that appear authentic.

For enterprises in healthcare, finance, and other regulated industries, this trend presents a critical challenge: how to safeguard against AI-enhanced phishing campaigns that exploit human trust.

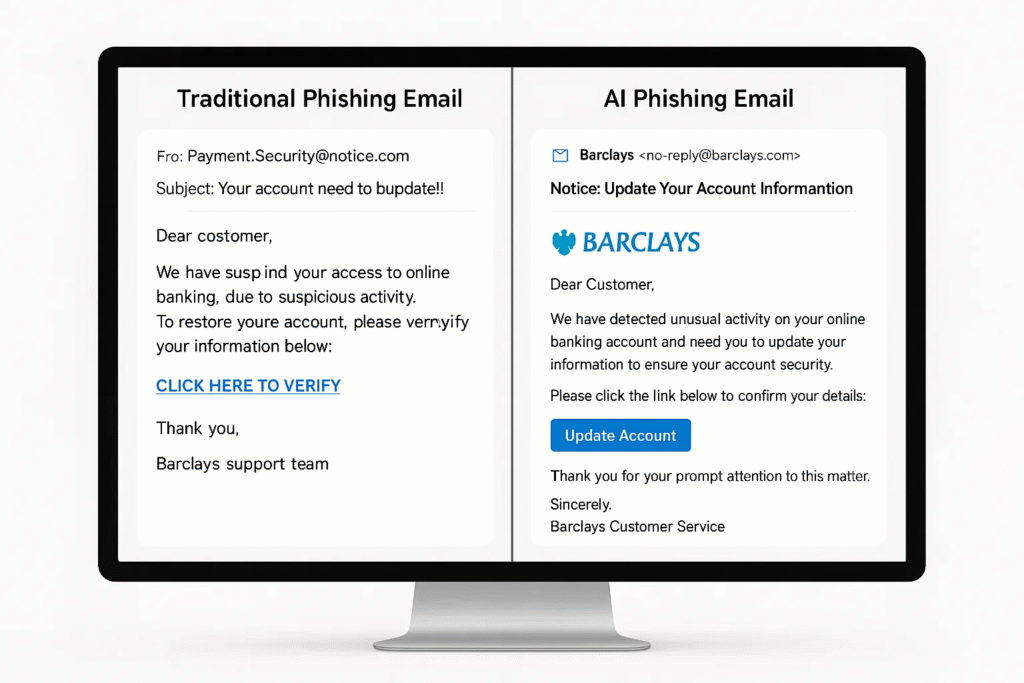

What makes this evolution so alarming is the shift from mass-scale scams to hyper-targeted deception. In the past, phishing emails often gave themselves away with obvious spelling errors, generic greetings, or suspicious links. Today, AI phishing emails can mimic internal corporate communication with uncanny precision. They can reference current projects, imitate executive tone, and even adapt industry jargon. What once took a skilled attacker days to craft can now be generated at scale in seconds.

The implications are staggering. According to industry surveys, over 90% of successful cyberattacks begin with a phishing attempt, and AI is multiplying that risk. Instead of sending out thousands of identical emails and hoping for one or two clicks, attackers can now create thousands of unique, highly convincing emails designed to bypass filters and trick even vigilant employees.

The rise of AI in phishing campaigns also signals a broader transformation in cybercrime: the automation of deception. Just as enterprises leverage AI to streamline operations and enhance customer experience, cybercriminals are applying the same technology to weaponize communication. An AI phishing campaign doesn’t just produce emails that look real; it can learn from failures and improve over time. If one style of email is blocked, the attacker’s tooling can quickly adjust tone, subject line, or content to slip past defenses on the next attempt.

For industries bound by strict compliance regulations like HIPAA in healthcare or PCI DSS in finance, the stakes are even higher. A single successful AI phishing email can lead to unauthorized access to sensitive data, massive fines, reputational damage, and loss of customer trust. In sectors where protecting personal and financial data is non-negotiable, the cost of failure is far more than financial—it’s existential.

Another critical shift is the erosion of human intuition as a defense mechanism. Employees have been trained to look for red flags—typos, strange sender addresses, suspicious links—but AI is rapidly eliminating these telltale signs. With natural language generation tools, an attacker can produce emails indistinguishable from legitimate ones, leaving employees with little more than gut instinct to rely on.

This arms race between attacker and defender is intensifying. Enterprises can no longer depend solely on traditional training programs or outdated spam filters. Instead, they must adopt AI-powered defenses, continuous monitoring, and incident response strategies tailored for the age of AI-driven phishing.

The new age of phishing is not just about tricking users—it’s about weaponizing trust at scale. And unless organizations act decisively, AI phishing emails will continue to exploit the most vulnerable link in the security chain: human behavior.

What Is AI-Powered Email Phishing?

AI phishing refers to the use of advanced technologies like machine learning and natural language processing (NLP) to craft deceptive emails that closely mimic legitimate communications. Unlike traditional phishing attempts—often riddled with grammar errors, generic greetings, and suspicious links—AI-generated phishing emails are far more sophisticated.

They are:

- Contextual and Personalized – AI can scrape publicly available data from LinkedIn, company websites, press releases, or even breached databases to create highly targeted content.

- Highly Convincing – Modern NLP models replicate natural sentence structure and professional tone, imitating corporate communication styles with near perfection.

- Scalable – A single attacker can automate thousands of unique, context-aware emails in minutes, each customized for its recipient.

Real-World Examples of AI Phishing Emails

- The Fake HR Notice

Imagine receiving an email from your HR department with the subject line: “Action Required: Updated Remote Work Policy – September 2025.” The email includes a link to a “policy document” that looks like it’s hosted on the company’s SharePoint site. In reality, it’s a malicious login page designed to harvest your credentials. What makes this dangerous is that AI can mimic your HR manager’s tone and even reference actual company events scraped from public news or LinkedIn posts.

- The CFO Wire Transfer Scam

Traditionally, attackers might send a poorly written message pretending to be the CFO. With AI, however, the phishing email can closely replicate the CFO’s writing style and signature block, and even reference recent financial reports. Employees in accounts payable could easily mistake it for a legitimate request, leading to fraudulent fund transfers.

- Vendor Impersonation

Many companies work with third-party vendors. AI tools can scrape details about supplier relationships and generate invoices or “payment reminders” that look identical to authentic ones. Because these emails are personalized and reference actual services, employees may process them without question.

- Customer Support Scams

AI phishing emails can impersonate IT or customer support teams, sending employees messages such as: “Your corporate email account requires security verification—log in here to avoid suspension.” These emails often use logos, branding, and formatting so convincing that even cautious employees might fall for them.
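The CFO and vendor scenarios share one mechanical trait that defenders can check for: a familiar display name paired with an address outside the organization’s domain. The sketch below is a minimal, hypothetical illustration of that check using Python’s standard `email.utils.parseaddr`. The `example.com` domain and the executive names are placeholder assumptions, and real mail gateways combine this signal with many others.

```python
from email.utils import parseaddr

# Hypothetical configuration: the organization's own mail domain and the
# display names of executives who are common impersonation targets.
INTERNAL_DOMAIN = "example.com"
PROTECTED_NAMES = {"jane doe", "john smith"}  # placeholder CEO/CFO names


def is_display_name_impersonation(from_header: str) -> bool:
    """Flag a From: header whose display name matches a protected executive
    but whose address is not on the internal domain."""
    display_name, address = parseaddr(from_header)
    name = " ".join(display_name.lower().split())  # normalize case and spacing
    domain = address.rpartition("@")[2].lower()    # text after the last "@"
    return name in PROTECTED_NAMES and domain != INTERNAL_DOMAIN


print(is_display_name_impersonation('"Jane Doe" <jane.doe@example.com>'))        # False
print(is_display_name_impersonation('"Jane Doe" <ceo.office@mail-example.net>'))  # True
```

Note that this exact-domain comparison would also flag legitimate subdomains such as `mail.example.com`; a real rule would need an allowlist for those.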

Why This Matters

Traditional phishing emails were relatively easy to spot, often triggering red flags like strange grammar or obviously fake sender addresses. But AI phishing emails blur those lines. By leveraging deep learning models, attackers can generate flawless messages at scale, making each phishing attempt feel uniquely tailored.

For enterprises, this means the old training advice—“look for typos” or “check the sender name”—is no longer enough. AI-driven phishing campaigns are pushing the limits of deception, creating a threat landscape where every inbox message could potentially be a weaponized email.

The danger lies not just in one successful click but in the automation of success. With AI, attackers can continuously test subject lines, wording, and attachments to determine which variations yield the highest response rates. Over time, the system “learns” to craft more effective phishing campaigns, making them progressively harder to defend against.

AI-powered phishing is not just an evolution of cybercrime—it’s a revolution, turning once-clumsy scams into precision-guided social engineering weapons.

Why AI Phishing Is Harder to Detect

Traditional phishing detection relies heavily on pattern recognition. Spam filters flag common traits such as misspelled words, known malicious domains, or repeated phrasing. AI phishing emails disrupt this model by creating endless variations that look legitimate and adapt in real time.

1. Natural-Language Accuracy

AI-driven phishing emails don’t just avoid spelling mistakes; they sound authentically human. Models like GPT can analyze writing styles and generate fluent, context-aware communication that mirrors corporate tone. For example, an email to a law firm might use formal, legalistic phrasing, while one to a tech company might adopt a casual, collaborative tone. This flexibility allows phishing emails to pass linguistic checks that would flag traditional scams.

2. Personalization at Scale

Previously, personalization was a resource-intensive process: attackers had to research individuals manually. Now, AI automates this, scraping public and dark web data to create customized phishing templates for thousands of employees simultaneously. Instead of sending “Dear user,” an AI phishing email might address the recipient by name, mention their department, or reference a recent company event. This level of personalization makes employees feel the message was intended specifically for them, lowering suspicion.

3. Speed and Adaptability

Perhaps the most alarming feature of AI phishing is feedback-driven evolution. When a campaign fails, attackers don’t simply abandon it. AI models analyze which links were clicked, which emails bypassed filters, and which were blocked. This data feeds back into the system, enabling rapid iteration. Within hours, a phishing campaign can relaunch in a smarter, stealthier form.

4. Bypassing Legacy Defenses

Most enterprise security solutions still rely on signature-based or rules-based detection. Because AI can generate infinite, unique variations of phishing content, these traditional defenses quickly become obsolete. A malicious email generated today may look entirely different from one sent just yesterday, making blacklists and keyword-based filters ineffective.
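To make this limitation concrete, here is a minimal sketch of an exact-match signature filter. It fingerprints known-bad message bodies with SHA-256 (an assumed stand-in for whatever signature scheme a given product actually uses) and shows that a lightly reworded variant produces an entirely different fingerprint, so it passes straight through. The message texts are illustrative only.

```python
import hashlib


def fingerprint(body: str) -> str:
    """Return a SHA-256 signature of a normalized message body."""
    normalized = " ".join(body.lower().split())  # collapse case and whitespace
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Signatures of previously reported phishing bodies (illustrative data only).
KNOWN_BAD = {
    fingerprint("Your mailbox will be suspended. Verify your account here."),
}


def is_blocked(body: str) -> bool:
    """Exact-match signature check: blocks only bodies already seen."""
    return fingerprint(body) in KNOWN_BAD


original = "Your mailbox will be suspended. Verify your account here."
reworded = "To avoid suspension of your mailbox, please verify your account here."

print(is_blocked(original))  # True
print(is_blocked(reworded))  # False
```

Normalization catches trivial edits such as extra spaces or capitalization, but any genuine rewording, which AI can produce endlessly, defeats the signature.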

5. Exploiting Trust Signals

AI can also manipulate signals that security systems and employees rely on for legitimacy. For instance, attackers can generate near-perfect spoofed domains, copy brand styling, or even create convincing internal email chains. This abuse of trusted markers makes detection even more complex, since both humans and machines are trained to trust familiar visual cues.
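One practical counter to lookalike domains is to measure how close a sender’s domain is to domains the organization already trusts. The sketch below uses plain Levenshtein edit distance. The trusted-domain list and the threshold of 2 are illustrative assumptions, and production tools typically add homoglyph and Unicode-confusable checks that this example omits.

```python
from typing import Optional


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


TRUSTED_DOMAINS = ["example.com", "examplebank.com"]  # hypothetical allowlist


def lookalike_of(domain: str, max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain this one appears to imitate, or None.
    An exact match (distance 0) is the real domain and is not flagged."""
    domain = domain.lower()
    for trusted in TRUSTED_DOMAINS:
        if 0 < levenshtein(domain, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("examp1e.com"))    # example.com
print(lookalike_of("example.com"))    # None
print(lookalike_of("unrelated.org"))  # None
```

A small threshold keeps false positives down on short domains, at the cost of missing lookalikes that differ by more than a couple of characters.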

In short, AI phishing emails don’t look like threats, and that’s exactly why they’re so dangerous. They blend seamlessly into normal workflows, making detection a constant challenge for both employees and enterprise security systems.

The Impact on Enterprises

Financial Services

In the financial sector, trust and accuracy are everything, and AI-driven attacks exploit both. Malicious messages often appear to come from regulators, senior executives, or partner institutions. These can request “urgent compliance checks” or “wire transfer approvals.” Because artificial intelligence can mimic industry language and even reference recent financial reports, the deception feels authentic. The outcome? Fraudulent transactions, stolen account credentials, and costly regulatory penalties. For large institutions, the damage can reach millions within hours, while for smaller firms, a single compromise may permanently erode client confidence.

Healthcare

Healthcare organizations face a unique challenge: protecting sensitive patient data while ensuring uninterrupted care delivery. AI-generated social engineering emails frequently impersonate IT staff or medical suppliers, urging clinicians to log into “updated portals” or download “critical test results.” When successful, these schemes can expose patient records, deploy ransomware, or even disrupt connected medical devices. Beyond severe HIPAA fines, the reputational fallout can drive patients to seek care elsewhere. In high-stakes scenarios, delayed record access due to compromised systems can also put lives in jeopardy.

SMBs and Startups

Small and midsize businesses are especially vulnerable because they often lack advanced detection tools or in-house security expertise. A startup CFO may receive what looks like a legitimate vendor invoice, or an employee might unknowingly share cloud login credentials. Without a dedicated security operations center (SOC), these compromises often go unnoticed until the damage is done. For SMBs, the consequences are often existential: data loss, revenue disruption, and reputational collapse. Unlike larger enterprises, smaller organizations may not have the financial cushion to recover.

The Psychology Behind AI Phishing Emails

Cybersecurity has always been about people as much as it is about technology. What makes AI phishing emails so dangerous is their ability to exploit the psychological triggers that drive human decision-making. Attackers use AI not only to generate grammatically correct messages but also to fine-tune them for maximum psychological impact.

For instance, AI systems can analyze patterns in social media posts, company announcements, or even public earnings calls to craft emails that match the emotional state of their target. Imagine an employee reading a well-timed email about “mandatory layoffs” right after seeing news of company restructuring on LinkedIn. The emotional response—fear and urgency—makes them more likely to click a link or download a malicious attachment.

Some of the most common psychological tactics amplified by AI include:

- Urgency & Scarcity – Messages like “last chance” or “immediate action required” force snap decisions.

- Authority Bias – AI mimics the tone and style of executives or regulators, making requests seem legitimate.

- Reciprocity & Trust – Personalized “thank you” emails or gift card scams appear genuine when AI tailors them with specific details.

- Curiosity – AI can generate subject lines that provoke intrigue, such as “Internal investigation results attached.”

The real advantage here is scale. A single attacker can run A/B tests on thousands of email variations, measuring which tone (fear, trust, or reward) gets the highest click-through rate. Over time, AI learns the most effective psychological hooks for specific industries or even departments.

This creates a feedback loop where phishing emails become increasingly convincing, blurring the line between real and fake communication. For enterprises, this means cybersecurity awareness training must evolve from generic “spot the typo” lessons to nuanced simulations that prepare employees for AI-enhanced psychological manipulation.

How AI Phishing Emails Are Evolving in 2025

The year 2025 marks a turning point in the cyber threat landscape. Attackers are no longer relying on static playbooks; instead, they are using adaptive AI systems that evolve in real time. This makes AI phishing not just a one-time threat but an ongoing, self-improving danger.

Here are some ways AI phishing emails are evolving this year:

- Deepfake-Enhanced Emails

Attackers are embedding AI-generated voice notes or videos that mimic executives. For example, an email might include a deepfake video of the CFO instructing employees to transfer funds. Combining email with video dramatically increases credibility.

- Contextual Awareness Through Data Mining

Modern AI can scrape company calendars, press releases, and even GitHub commits to craft emails relevant to current events. Imagine receiving a phishing email about a “new compliance policy update” the same day your company actually launched one.

- Adaptive Campaigns

When phishing attempts fail, attackers feed that data back into the model. If an industry blocks certain phrasing, the AI can quickly generate new variations designed to bypass filters.

- Industry-Specific Attacks

In healthcare, AI phishing emails may pose as patient intake forms. In finance, they may mimic wire transfer approvals. The specificity is designed to evade suspicion by blending seamlessly into daily workflows.

- Integration with Other Cyber Attacks

AI phishing is increasingly the entry point for ransomware or supply-chain breaches. Once credentials are harvested, attackers deploy secondary payloads, turning a single email click into a full-scale incident.

By 2025, AI phishing emails are no longer “scattershot.” They are precision-guided attacks that evolve faster than traditional security tools can adapt. This arms race between attacker AI and defender AI is defining the next decade of cybersecurity.

For enterprises, the shocking reality is this: every employee with an inbox is now a potential AI phishing target, and every ignored security investment widens the attack surface.

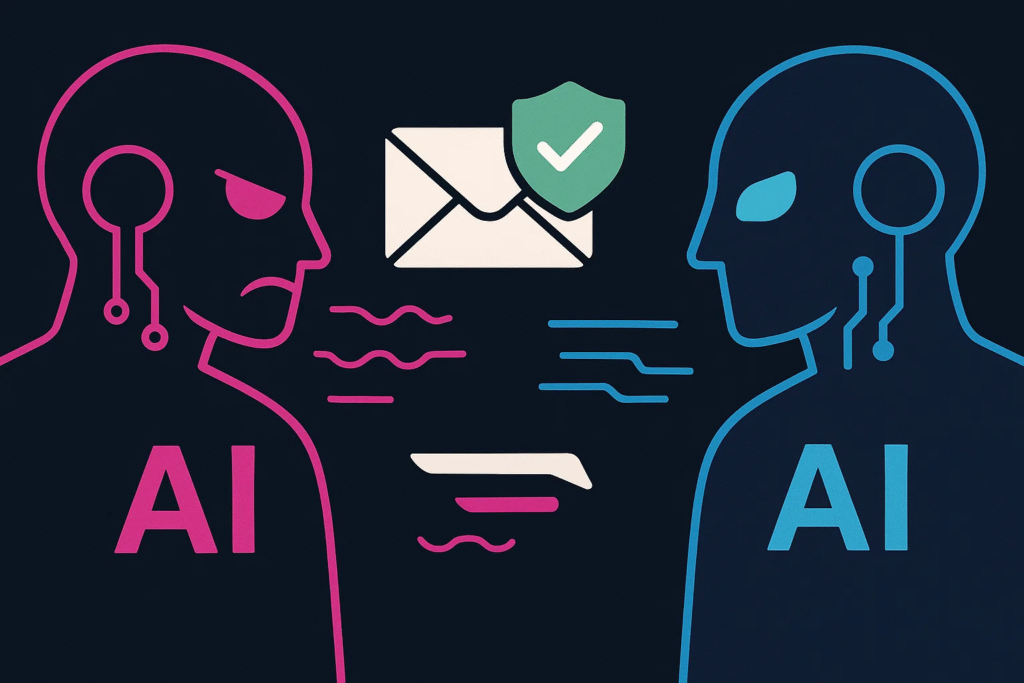

Future Outlook: The Coming AI vs. AI Cyber Battle

The rise of AI-generated email threats signals a fundamental shift in cybersecurity. What was once a battle between human attackers and human defenders is quickly evolving into an arms race between artificial intelligence systems on both sides. On one hand, criminals are leveraging machine learning to craft deceptive, personalized messages at scale. On the other, enterprises are beginning to adopt AI-driven defenses that can analyze communication patterns, detect anomalies, and flag potential threats in real time.

This shift means that the future of security will rely heavily on automation, behavioral analytics, and intelligent filtering. Tools capable of spotting subtle irregularities—like a change in writing style, unusual timing of messages, or context mismatches—will become essential. Likewise, new forms of generative AI detection will help distinguish between human-written content and machine-produced messages.
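As a toy illustration of this kind of behavioral analytics, the sketch below learns each sender’s usual sending hours from history and flags a new message sent at an hour that sender has rarely or never used. The history data, the 5% rarity threshold, and the minimum-history size are hypothetical assumptions; real products model many more features (writing style, recipients, request type) and must handle time zones and travel.

```python
from collections import Counter, defaultdict


class SendTimeBaseline:
    """Per-sender histogram of sending hours (0-23), used to flag unusual timing."""

    def __init__(self, min_history: int = 20, rare_fraction: float = 0.05):
        self.hours = defaultdict(Counter)   # sender -> Counter of hours
        self.min_history = min_history      # don't judge senders with little data
        self.rare_fraction = rare_fraction  # hours below this share are unusual

    def observe(self, sender: str, hour: int) -> None:
        self.hours[sender.lower()][hour] += 1

    def is_unusual(self, sender: str, hour: int) -> bool:
        counts = self.hours.get(sender.lower(), Counter())
        total = sum(counts.values())
        if total < self.min_history:
            return False  # not enough history to call anything anomalous
        return counts[hour] / total < self.rare_fraction


baseline = SendTimeBaseline()
# Hypothetical history: the CFO normally sends mail during business hours.
for day in range(10):
    for hour in (9, 11, 14, 17):
        baseline.observe("cfo@example.com", hour)

print(baseline.is_unusual("cfo@example.com", 14))  # False
print(baseline.is_unusual("cfo@example.com", 3))   # True
```

Declining to judge senders with little history trades some missed detections for fewer false alarms on new contacts.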

But no organization can fight this battle alone. Stronger collaboration between MSSPs, MSPs, and enterprise teams will be critical to staying ahead. By sharing threat intelligence, coordinating incident responses, and continuously training systems against emerging tactics, defenders can close the gap that attackers are exploiting.

For industries like healthcare and finance, where the risks extend beyond financial loss to regulatory penalties and reputational harm, this investment isn’t optional—it’s a necessity. The enterprises that thrive in this new age will be those that view AI not just as a business enabler, but as a strategic defense weapon against the very threats it now fuels.

In the end, the message is clear: organizations must adapt quickly. The future of cybersecurity won’t be decided by humans alone—it will be determined by which side’s AI is smarter, faster, and more resilient.

👉 Protect your organization from AI-powered phishing. Request a cybersecurity consultation with FusionCyber today.

Featured links:

Why Fusion Cyber Group Is the Best MSSP + MSP Partner for Healthcare & Medical Practices

Protecting Your SMB: Where to Start & How an MSSP Can Help

Managed Cybersecurity Solutions for SMBs

Verizon 2024 Data Breach Investigations Report

FAQ:

What makes AI-generated email attacks different from traditional phishing?

Unlike older scams filled with typos and poor grammar, AI-driven messages use natural language processing to mimic professional tone, reference real events, and personalize content at scale—making them much harder to detect.

Why are healthcare and financial services especially vulnerable?

Both sectors handle sensitive data and high-value transactions. A single compromised email can lead to regulatory penalties, identity theft, or massive financial losses, making them prime targets.

How do attackers use AI to increase success rates?

Attackers run automated A/B tests on thousands of emails, adjusting subject lines, tone, and timing. AI models learn from each failure and continuously refine tactics to bypass defenses.

Can small businesses be targeted too?

Yes. Startups and SMBs are often hit hardest because they lack advanced security infrastructure. Without a dedicated SOC or MSSP partner, a single breach can be catastrophic.

What defenses are available against AI-driven email threats?

Enterprises are adopting AI-powered filtering, behavioral analytics, and MSSP/MSP partnerships. These defenses detect anomalies, stop credential theft, and respond quickly to incidents.

Our Cybersecurity Guarantee

“At Fusion Cyber Group, we align our interests with yours.”

Unlike many providers who profit from lengthy, expensive breach clean-ups, our goal is simple: stop threats before they start and stand with you if one ever gets through.

That’s why we offer a cybersecurity guarantee: in the very unlikely event that a breach gets through our multi-layered, 24/7 monitored defenses, we will handle all of the following at no cost to you:

- Threat containment

- Incident response

- Remediation

- Eradication

- Business recovery

Ready to strengthen your cybersecurity defenses? Contact us today for your FREE network assessment and take the first step towards safeguarding your business from cyber threats!