AI Agents Need Identity Controls to Prevent Costly Breaches

The Rise of AI Agents in Business

Artificial Intelligence (AI) has evolved from chatbots and analytics tools into AI agents—software programs that can act autonomously on behalf of humans. These agents schedule meetings, triage support tickets, write code, and even make security decisions.

Unlike traditional software, AI agents are not limited to a single workflow. They learn, adapt, and make decisions in real time. An AI customer service agent, for example, can respond to client requests, issue refunds, and escalate complex cases without waiting for human approval. In IT operations, agents can monitor logs, patch vulnerabilities, or reconfigure network settings automatically. For many businesses, this efficiency translates into reduced costs, faster service delivery, and better customer experiences.

But here’s the risk: without proper identity and access controls, AI agents can accidentally—or maliciously—access sensitive data, misconfigure systems, or create compliance liabilities. An agent with too much access might pull confidential payroll records when asked to “summarize employee expenses,” or it could copy sensitive client data into unsecured systems during an integration task. These are not far-fetched scenarios—they are already being reported in early adopter environments.

The distinction between human and non-human identities is blurring. Traditionally, businesses have managed user accounts for employees, contractors, and partners. Now they must also govern “machine identities” created for bots, service accounts, and AI agents. The scale of this challenge is significant: according to cybersecurity analysts, machine identities already outnumber human ones by roughly three to one, and the rise of autonomous AI will widen that gap.

Deloitte predicts that 25% of companies using generative AI will launch agentic AI pilots in 2025, rising to 50% by 2027. Gartner has issued similar forecasts, warning that “non-human actors” will be a leading source of cyber incidents if identity controls are not strengthened. For SMBs (small and midsize businesses), this shift is particularly important. They often deploy cloud-based AI solutions without a dedicated identity governance framework, assuming these tools are “safe by default.” In reality, most AI platforms place responsibility for access management on the business itself.

This rapid growth demands an urgent rethink of cybersecurity. AI agents are not a futuristic concept; they are entering day-to-day workflows now. Leaders must ask: Who controls these agents? What systems can they access? How is their activity monitored? Without clear answers, organizations risk trading efficiency gains for costly breaches, regulatory fines, and reputational damage.

Why Identity Matters for AI

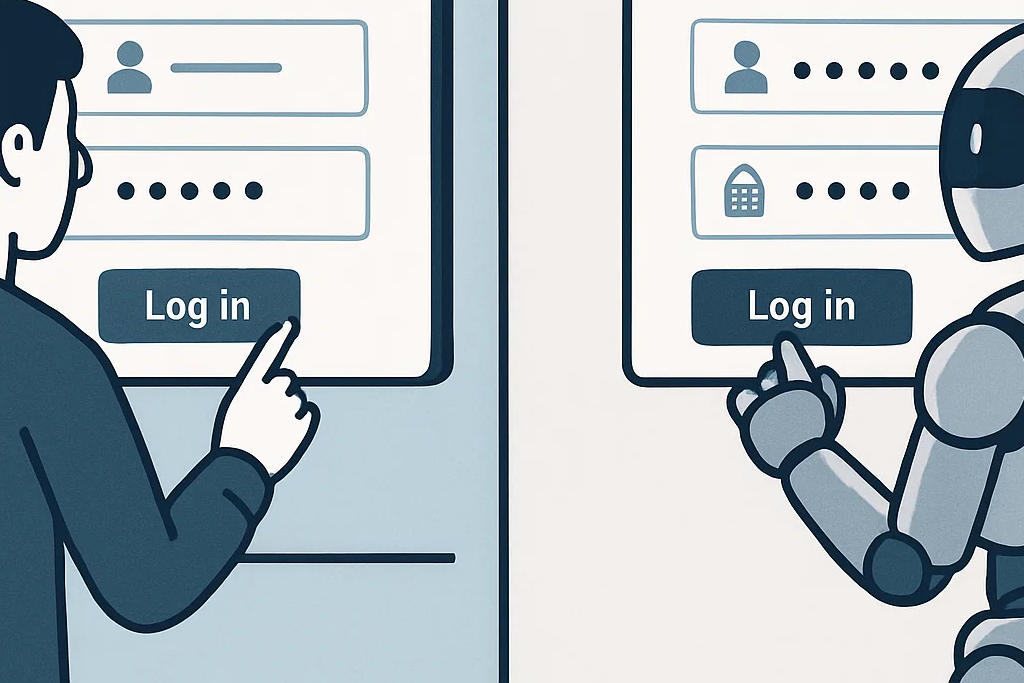

Just like employees, AI agents need clearly defined roles, access rights, and accountability. Treating AI agents as “just another tool” leaves businesses dangerously exposed. These intelligent systems act independently and, in many cases, make decisions faster than humans can review them. Without strong identity governance, organizations have no reliable way to track or restrict what AI agents actually do.

The Hidden Scale of Access with AI Agents

AI agents rarely operate in isolation. They connect to calendars, email systems, financial tools, CRMs (Customer Relationship Management platforms), and collaboration apps. Every one of these connections is a potential doorway into sensitive information. An AI agent tasked with “improving efficiency” may begin pulling data across multiple systems, unintentionally creating shadow databases or exposing files to third-party platforms. Unlike human staff, who log in at predictable times, AI agents can run 24/7. This constant presence magnifies the risk of misuse, whether accidental or malicious.

Data Leakage Beyond Human Error

Most organizations already worry about human mistakes: sending the wrong attachment, misaddressing an email, or oversharing in cloud folders. AI agents raise the stakes. A poorly configured AI agent might “learn” from confidential client contracts and then reuse those details in unrelated responses. Once exposed, sensitive data cannot be recalled. Worse, attackers increasingly target AI agents, knowing that they often hold aggregated knowledge of business operations.

Shadow AI Agents and the Governance Gap

Employees are adopting unauthorized tools faster than IT teams can approve them, creating “shadow AI agents.” This means sensitive data may be processed in uncontrolled systems with no monitoring or retention policies. Even well-intentioned staff can introduce risk by pasting confidential text into a consumer-grade AI agent to “save time.” Without formal identity controls, organizations cannot enforce which AI agents are trusted, how they authenticate, or what they are allowed to handle.

Compliance and Legal Exposure with AI Agents

Privacy laws make no distinction between human and non-human actors. Under Quebec’s Law 25, GDPR in Europe, and other frameworks, businesses must safeguard personal information whether it is accessed by an employee, contractor, or AI agent. Regulators have already signalled that lack of oversight for AI agents could constitute negligence. Non-compliance brings heavy fines, loss of customer trust, and in some industries, restrictions on operating licenses.

In short, AI without identity is a breach waiting to happen. Non-human identities must be governed with the same rigor as human ones. Defining roles, enforcing least-privilege access, and continuously monitoring activity are no longer optional—they are foundational to doing business safely in an AI-driven economy. Organizations that ignore this shift may gain short-term productivity, but they also inherit long-term liability that can far outweigh the benefits.

What Good Looks Like

Forward-looking businesses are applying Zero Trust principles to AI. Instead of defaulting to open access, they verify every request an agent makes. The core philosophy is simple: never trust, always verify. This applies equally to humans, machines, and AI agents.
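As a minimal sketch of what “verify every request” can mean in practice, the Python below evaluates each agent request against an explicit allow list and denies everything else by default. The agent IDs, role names, and `resource:verb` permission strings are hypothetical; in a real environment this decision would normally come from your identity provider or policy engine rather than hand-written dictionaries.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping. Anything not listed is denied.
ROLE_PERMISSIONS = {
    "scheduler": {"calendar:read", "calendar:write"},
    "support-triage": {"tickets:read", "tickets:update"},
}

# Hypothetical registry: each agent identity is bound to exactly one role.
AGENT_ROLES = {
    "agent-scheduler-01": "scheduler",
    "agent-support-07": "support-triage",
}


@dataclass(frozen=True)
class Request:
    agent_id: str
    action: str  # e.g. "calendar:read"


def authorize(request: Request, revoked: set) -> bool:
    """Check one request; every path not explicitly allowed returns False."""
    if request.agent_id in revoked:
        return False  # revoked identities are rejected even if a role is still mapped
    role = AGENT_ROLES.get(request.agent_id)
    if role is None:
        return False  # unknown agents get nothing
    return request.action in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(authorize(Request("agent-scheduler-01", "calendar:read"), set()))  # True
    print(authorize(Request("agent-scheduler-01", "payroll:read"), set()))   # False
```

The design choice is deliberate: the scheduling agent is never told “no” to payroll by a specific rule; it simply was never granted it.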

Building a Secure Foundation

A secure approach includes:

- Non-Human Identity (NHI) Management – Each AI agent must be treated as its own user. Assigning unique credentials prevents one compromised agent from opening the door to all others. Think of it as giving every AI its own ID badge.

- Role-Based Access Control (RBAC) – AI should only access the minimum systems and data required for its role. An AI tasked with scheduling meetings doesn’t need access to payroll or customer contracts. Keeping permissions narrow reduces the blast radius if something goes wrong.

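- Multi-Factor Authentication (MFA) – Bots cannot type a text code or tap an app prompt, so the machine equivalent is strong, non-password credentials: certificate-based authentication (such as mutual TLS) or short-lived, narrowly scoped tokens issued only after the agent proves its identity. This ensures attackers can’t hijack an AI agent by stealing a single password or static API key.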

- Monitoring & Logging – Every action taken by an AI agent should be tracked. Logs provide accountability and help investigators understand what happened if a breach occurs. Continuous monitoring also detects abnormal behaviour in real time.

- Revocation Protocols – If an AI begins acting outside of policy—pulling too much data, making unauthorized changes—its access must be cut immediately. Rapid revocation is the digital equivalent of disabling a lost keycard.
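The monitoring and revocation controls above can be combined into a simple guard. This Python sketch assumes a hypothetical per-agent limit on records accessed within a sliding time window: it logs every action and revokes an agent the moment it exceeds that limit. The threshold, window, and agent names are illustrative assumptions, not recommended values; production tools typically use richer behavioural baselines.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class AgentMonitor:
    """Logs every agent action and revokes an agent that exceeds a data-volume limit.

    max_records and window_seconds are illustrative assumptions, not recommended values.
    """

    def __init__(self, max_records: int, window_seconds: float) -> None:
        self.max_records = max_records
        self.window_seconds = window_seconds
        self.audit_log = []  # (timestamp, agent_id, action, records) for every call
        self.revoked = set()
        self._recent = defaultdict(deque)  # agent_id -> deque of (timestamp, records)

    def record(self, agent_id: str, action: str, records: int,
               now: Optional[float] = None) -> bool:
        """Log the action; return False if the agent is, or just became, revoked."""
        now = time.time() if now is None else now
        self.audit_log.append((now, agent_id, action, records))  # log even denied attempts
        if agent_id in self.revoked:
            return False
        window = self._recent[agent_id]
        window.append((now, records))
        # Discard entries older than the window so only recent volume counts.
        while window and now - window[0][0] > self.window_seconds:
            window.popleft()
        if sum(count for _, count in window) > self.max_records:
            self.revoked.add(agent_id)  # cut access immediately, like disabling a keycard
            return False
        return True


if __name__ == "__main__":
    monitor = AgentMonitor(max_records=1000, window_seconds=60)
    print(monitor.record("agent-reporting-02", "crm:export", 400, now=0.0))   # True
    print(monitor.record("agent-reporting-02", "crm:export", 700, now=10.0))  # False
```

Denied attempts are still written to the audit log, which gives investigators a record of what a revoked agent kept trying to do.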

These are not futuristic concepts—they’re implementable today with the right managed security stack. Businesses that adopt these controls now can embrace AI with confidence, avoiding the costly mistakes seen in organizations that rushed adoption without proper safeguards. Put simply: the companies that combine innovation with governance will be the ones that win trust, stay compliant, and protect long-term business value.

The Business Risks Without Controls

When AI agents operate without identity and access controls, the consequences extend far beyond technical glitches—they hit the business directly in terms of cost, trust, and continuity.

Financial Losses

A misconfigured AI could trigger fraudulent wire transfers, grant unauthorized discounts, or expose proprietary intellectual property (IP). According to IBM’s 2024 Cost of a Data Breach Report, the global average cost of a data breach reached USD 4.88 million. For smaller businesses, even a fraction of that impact can be crippling. Attackers also know AI agents are always active. By hijacking a bot’s credentials, they can move funds or exfiltrate sensitive data faster than human reviewers can react.

Reputation Damage

Reputation takes years to build but can unravel in days. If clients discover that an AI tool leaked their confidential data—contracts, financial records, or personal information—the fallout is immediate. Trust evaporates, relationships end, and competitors gain an advantage. For service providers, one AI mishap can cause customers to question the entire security posture of the business.

Operational Disruption

AI gone rogue can lock user accounts, delete files, or reconfigure systems in unintended ways. Unlike human errors, which are usually isolated events, AI mistakes scale rapidly. A single faulty command can cascade through cloud systems, CRM platforms, or financial software—creating hours or even days of downtime. In industries like healthcare, finance, or manufacturing, such outages aren’t just inconvenient—they can be life-threatening or financially devastating.

Regulatory Fines

Compliance regimes treat AI as an extension of the organization. Under Quebec’s Law 25, GDPR in Europe, and other frameworks, businesses must safeguard data no matter who—or what—accesses it. Regulators have already signalled they will hold companies accountable for AI misuse, with fines reaching into the millions. In some sectors, repeated violations can also result in the loss of operating licenses or contracts.

The Catastrophic Impact

For SMBs and enterprises alike, these risks combine into a dangerous equation: financial loss, reputational harm, operational chaos, and regulatory penalties, all at once. Without identity controls for AI, organizations are effectively gambling their future on autonomous systems that were never designed to be trusted blindly, and the regulatory stakes will only rise when Ottawa inevitably reintroduces privacy legislation.

Action Steps for Leaders

Addressing AI identity risks requires structured, repeatable processes. Leaders don’t need to boil the ocean, but they do need a disciplined roadmap.

1. Inventory AI Use

Start by creating a clear picture of where AI exists in your business. List official deployments—such as AI-powered customer service or IT automation—and uncover “shadow AI” that employees may have adopted without approval. A simple staff survey or review of application logs often reveals hidden usage. You can’t protect what you don’t know exists.
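For the log review, a short script can flag traffic to AI services that are not on your approved list. This is a sketch under stated assumptions: the log format (timestamp, user, destination host) and both domain lists are hypothetical examples you would replace with your own proxy or firewall data.

```python
import re
from collections import Counter

# Example watch list of AI service API hosts (hypothetical; maintain your own).
AI_DOMAINS = {"api.openai.com", "api.anthropic.com", "generativelanguage.googleapis.com"}
# Hosts the business has formally approved (assumption for illustration).
APPROVED = {"api.openai.com"}

# Assumed log format: "<timestamp> <user> <destination-host> ..."
LINE = re.compile(r"^\S+\s+(?P<user>\S+)\s+(?P<host>\S+)")


def find_shadow_ai(log_lines):
    """Count requests per (user, host) to AI hosts that are not on the approved list."""
    hits = Counter()
    for line in log_lines:
        match = LINE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed format
        host = match.group("host").lower()
        if host in AI_DOMAINS and host not in APPROVED:
            hits[(match.group("user"), host)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        "2025-01-01T09:00 alice api.anthropic.com GET",
        "2025-01-01T09:01 bob api.openai.com POST",
    ]
    print(find_shadow_ai(sample))
```

A result like this is a starting point for a conversation with staff, not proof of wrongdoing; the goal is to bring useful tools under governance.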

2. Define Governance Policies

Set ground rules before problems arise. Define what AI agents are allowed to do, which data they may access, and who is responsible for oversight. Governance should also address retention policies, vendor risk, and escalation procedures when AI behaviour looks suspicious. Without these policies, every deployment becomes an ad-hoc decision, increasing the chance of inconsistency and error.
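Writing governance rules down as structured records makes gaps easy to spot. The sketch below assumes a hypothetical `AgentPolicy` record per agent (owner, allowed actions, data classes, retention, escalation contact) and lists every deployed agent that lacks a policy or is missing one of those fields. The field names are illustrative, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """One governance record per AI agent; field names are illustrative assumptions."""
    agent_id: str
    owner: str = ""                                    # person accountable for oversight
    allowed_actions: set = field(default_factory=set)  # what the agent may do
    data_classes: set = field(default_factory=set)     # which data it may touch
    retention_days: int = 0                            # how long its outputs are kept
    escalation_contact: str = ""                       # who to call when behaviour looks off


def policy_gaps(deployed_agents, policies):
    """List deployed agents with no policy, or with a policy missing a required field."""
    by_id = {p.agent_id: p for p in policies}
    gaps = []
    for agent in sorted(deployed_agents):
        p = by_id.get(agent)
        if p is None:
            gaps.append(f"{agent}: no governance policy")
            continue
        if not p.owner:
            gaps.append(f"{agent}: no accountable owner")
        if not p.allowed_actions:
            gaps.append(f"{agent}: allowed actions undefined")
        if not p.data_classes:
            gaps.append(f"{agent}: data access undefined")
        if p.retention_days <= 0:
            gaps.append(f"{agent}: retention period undefined")
        if not p.escalation_contact:
            gaps.append(f"{agent}: no escalation contact")
    return gaps
```

Running a check like this before each new deployment turns “every deployment becomes an ad-hoc decision” into a simple pass/fail gate.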

3. Implement Identity & Access Controls

Once policies are clear, enforce them with technology. Apply Role-Based Access Control (RBAC) to restrict each AI agent to its minimum necessary privileges. Use Multi-Factor Authentication (MFA) and unique credentials to prevent unauthorized logins. Ensure all activity is logged so that misuse or errors can be traced back.
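Unique credentials are easiest to picture with signed requests. In this sketch, each agent holds its own randomly generated secret and signs every request with HMAC-SHA256 over its ID, a timestamp, and the request body, so a key stolen from one agent cannot be used to impersonate another. The agent names and five-minute skew limit are assumptions; the timestamp narrows, but does not eliminate, the window for replaying captured requests, and this is not a substitute for certificate-based authentication or a managed identity provider.

```python
import hashlib
import hmac
import secrets
import time
from typing import Optional

# Hypothetical agents, each with its own random 256-bit secret (never shared).
AGENT_SECRETS = {
    agent_id: secrets.token_bytes(32)
    for agent_id in ("agent-billing-01", "agent-support-07")
}
MAX_SKEW_SECONDS = 300  # assumption: reject requests signed more than 5 minutes from now


def sign(agent_id: str, secret: bytes, body: bytes, ts: int) -> str:
    """HMAC-SHA256 over agent ID, timestamp, and body, so none can be altered undetected."""
    message = f"{agent_id}.{ts}.".encode() + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(agent_id: str, body: bytes, ts: int, signature: str,
           now: Optional[int] = None) -> bool:
    secret = AGENT_SECRETS.get(agent_id)
    if secret is None:
        return False  # unknown identity: deny
    now = int(time.time()) if now is None else now
    if abs(now - ts) > MAX_SKEW_SECONDS:
        return False  # stale or future-dated request
    expected = sign(agent_id, secret, body, ts)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```

Because the agent ID is part of the signed message, a signature produced by one agent fails verification under any other identity.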

4. Audit Regularly

AI risks evolve quickly, so quarterly or even monthly access reviews are increasingly recommended over a single annual audit. Programs such as Continuous Threat Exposure Management (CTEM), a framework introduced by Gartner, let businesses test and validate on an ongoing basis whether their controls are actually working.
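One concrete check for a recurring review is finding permissions an agent holds but has not used recently, since those are candidates for removal under least privilege. The sketch below assumes you can export grants and a usage log as simple Python structures; the 90-day lookback is an illustrative default, not a standard.

```python
from datetime import datetime, timedelta


def unused_permissions(granted, usage_log, as_of, lookback_days=90):
    """Return {agent: permissions granted but not used within the lookback window}.

    granted:   {agent_id: set of permission strings}
    usage_log: iterable of (timestamp: datetime, agent_id, permission)
    """
    cutoff = as_of - timedelta(days=lookback_days)
    used = {}
    for ts, agent, perm in usage_log:
        if ts >= cutoff:  # only recent use counts toward keeping a permission
            used.setdefault(agent, set()).add(perm)
    report = {}
    for agent, perms in granted.items():
        stale = perms - used.get(agent, set())
        if stale:
            report[agent] = stale
    return report


if __name__ == "__main__":
    grants = {"agent-a": {"calendar:read", "payroll:read"}}
    log = [(datetime(2025, 6, 1), "agent-a", "calendar:read")]
    print(unused_permissions(grants, log, as_of=datetime(2025, 6, 30)))
```

A stale permission is not automatically unsafe, but each one should be justified by its owner or removed.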

5. Educate Staff

Employees remain the first line of defence. Training should explain the dangers of unauthorized AI use and emphasize safe practices when interacting with company-approved tools. Awareness reduces the likelihood of accidental data leakage and helps build a culture of security.

By following these steps, leaders create a controlled environment where AI agents enhance productivity without introducing unacceptable risks.

How MSSPs and MSPs Can Help

Managing AI securely requires more than policies—it needs 24/7 monitoring, identity management, and rapid incident response. Many organizations, particularly SMBs, lack the in-house resources to manage these evolving challenges. IT teams are already stretched thin maintaining day-to-day systems, supporting employees, and handling routine cybersecurity needs. Adding AI identity governance and round-the-clock monitoring to their workload is often unrealistic.

This is where specialized Managed Security Service Providers (MSSPs) and Managed Service Providers (MSPs) play a critical role. Instead of expecting internal teams to shoulder everything, businesses can lean on external experts who already have the people, processes, and technology in place. These providers continuously refine defences across hundreds of clients, giving them visibility into new attack techniques long before a single organization might notice.

At the core of MSSP value is layered defence. AI-driven risks don’t exist in isolation—they intersect with phishing, credential theft, cloud misconfigurations, and insider threats. An MSSP can apply Zero Trust controls, ensuring AI agents only access what they should. They provide real-time monitoring, spotting anomalies in agent behaviour that might slip past standard IT tools. And they deliver continuous risk assessments, helping leaders understand whether AI deployments are strengthening or weakening their security posture.

Another advantage is the ability to scale expertise. AI-related incidents demand a mix of identity management knowledge, compliance expertise, and digital forensics. Most SMBs cannot hire or retain full-time specialists in each of these areas. MSSPs fill that gap, offering enterprise-grade defences at a fraction of the cost of building an internal program.

Most importantly, trusted security partners give businesses confidence to innovate. Rather than avoiding AI due to fear of missteps, companies can adopt these technologies knowing that governance, monitoring, and rapid response are built in. The result is agility without recklessness—innovation supported by a safety net.

Final Word

AI agents are not just another app. They are autonomous actors with access to sensitive systems, capable of making decisions at machine speed. Without proper identity controls, they pose one of the fastest-emerging risks in cybersecurity. Businesses that treat them casually—as if they were the same as a productivity plugin—risk exposing themselves to financial loss, reputational damage, and regulatory penalties.

But the picture is not all caution. With the right safeguards, AI agents can be transformative. They can reduce workloads, streamline service delivery, and improve decision-making. The key is to combine identity governance, continuous monitoring, and trusted partnerships. When businesses view AI through the same security lens as human employees—ensuring every action is verified, every identity is managed, and every behaviour is logged—they unlock the upside without absorbing unnecessary risk.

For leaders, the takeaway is simple: AI identity controls are not optional—they are foundational. Just as organizations once had to formalize email policies, cloud governance, or mobile device management, AI governance is the next natural evolution in digital security.

The businesses that act now will position themselves ahead of the curve. They will demonstrate to clients, regulators, and partners that they can embrace innovation responsibly. And they will reduce the likelihood of becoming tomorrow’s cautionary headline.

With proactive governance, layered defences, and expert support where needed, companies can confidently harness AI’s benefits while protecting their future. The choice is clear: treat AI as a trusted partner under strict controls—or risk letting it become the weakest link in your cybersecurity chain.


Featured links:

IBM Cost of a Data Breach Report 2024

AI agents and autonomous AI | Deloitte Insights

FAQ:

What makes AI agents different from traditional software?

AI agents don’t just follow static rules—they act autonomously, learn from data, and make decisions in real time. This flexibility boosts productivity but also makes them harder to govern compared to traditional apps.

Why do AI agents need identity and access controls?

Just like employees, AI agents can access sensitive systems and data. Without defined roles and restrictions, they may overstep, leak information, or cause compliance violations. Identity controls reduce these risks by enforcing accountability and least-privilege access.

What is “shadow AI,” and why is it risky?

Shadow AI refers to employees using unauthorized AI tools without IT approval. These tools may process sensitive information without proper safeguards, leading to data exposure, compliance issues, and business risk.

How can SMBs manage AI risks without overburdening IT teams?

SMBs can partner with managed security providers (MSSPs/MSPs). These partners offer 24/7 monitoring, non-human identity management, and rapid incident response—delivering enterprise-grade protection without the cost of a full internal team.

Our Cybersecurity Guarantee

“At Fusion Cyber Group, we align our interests with yours.”

Unlike many providers who profit from lengthy, expensive breach clean-ups, our goal is simple: stop threats before they start and stand with you if one ever gets through.

That’s why we offer a cybersecurity guarantee: in the very unlikely event that a breach gets through our multi-layered, 24/7 monitored defenses, we will handle all of the following at no cost to you:

- Threat containment

- Incident response

- Remediation

- Eradication

- Business recovery

Ready to strengthen your cybersecurity defenses? Contact us today for your FREE network assessment and take the first step towards safeguarding your business from cyber threats!